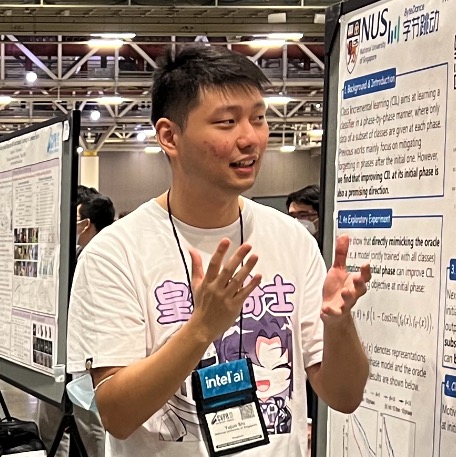

Greetings! My name is Yujun Shi. I'm currently a member of technical staff at Microsoft AI (Multimodal). I obtained my PhD in ECE from the National University of Singapore, advised by Professor Vincent Y. F. Tan. Previously, I received my B.Eng. in Computer Science from Nankai University.

Email / Google Scholar / GitHub / Twitter / LinkedIn

"It's about a man who has nothing, who risks everything, to feel something." -- Jessica Day

Yujun Shi, Jun Hao Liew, Hanshu Yan, Vincent Y. F. Tan, Jiashi Feng
ICML, 2025
[arXiv link] [project page] [code]
Lightning-fast (~1s) and accurate drag-based image editing trained on videos!

Yujun Shi, Chuhui Xue, Jun Hao Liew, Jiachun Pan, Hanshu Yan, Wenqing Zhang, Vincent Y. F. Tan, Song Bai
CVPR, 2024
[arXiv link] [project page] [video] [code]
We enable "drag" editing on diffusion models. By leveraging large-scale pre-trained diffusion models, we greatly improve the generality of "drag" editing.

Yujun Shi, Jian Liang, Wenqing Zhang, Chuhui Xue, Vincent Y. F. Tan, Song Bai
ICLR, 2023; TPAMI, 2024
[arXiv link] [code]
We find that dimensional collapse of representations is one of the culprits behind the performance degradation in heterogeneous Federated Learning. We propose FedDecorr to mitigate this problem and thus facilitate FL under data heterogeneity.

Yujun Shi, Kuangqi Zhou, Jian Liang, Zihang Jiang, Jiashi Feng, Philip Torr, Song Bai, Vincent Y. F. Tan
CVPR, 2022
[arXiv link] [bibtex] [poster] [video] [code]
We propose a Class-wise Decorrelation regularizer that enables the CIL learner at the initial phase to mimic the representations produced by the oracle model (the model jointly trained on all classes), thus boosting Class Incremental Learning.

Zihang Jiang, Qibin Hou, Li Yuan, Daquan Zhou, Yujun Shi, Xiaojie Jin, Anran Wang, Jiashi Feng
NeurIPS, 2021
[arXiv link] [code]
Instead of only supervising the classification token in ViT, we propose a novel offline knowledge distillation method that supervises all output tokens and significantly boosts ViT performance.

Li Yuan, Yunpeng Chen, Tao Wang, Weihao Yu, Yujun Shi, Zihang Jiang, Francis E. H. Tay, Jiashi Feng, Shuicheng Yan
ICCV, 2021
[arXiv link] [code]
By injecting the missing local information into vanilla ViT with our proposed T2T module, we achieve decent ImageNet classification accuracy when training ViT from scratch.

Yujun Shi, Li Yuan, Yunpeng Chen, Jiashi Feng
CVPR, 2021
[arXiv link] [bibtex] [talk @ ContinualAI] [code]
By viewing the learning algorithm as a channel (with data as input and model parameters as output) and using the chain rule of mutual information, we propose a novel algorithm called Bit-Level Information Preserving (BLIP) to combat forgetting.

Yun Liu, Yu-huan Wu, Peisong Wen, Yujun Shi, Yu Qiu, Ming-Ming Cheng
TPAMI
[paper link] [code]
By leveraging multi-level information, we achieve SOTA results on weakly-supervised instance/semantic segmentation.

National University of Singapore, Singapore
PhD in Machine Learning (Jan. 2021 to present)

Nankai University, Tianjin, China
B.Eng. in Computer Science (2015 to 2019)
GPA: 92.14/100, Rank: 1/93

Changsha First Middle School, Changsha, China
High School (2012 to 2015)

EE6139 Information Theory and its Applications
EE5137 Stochastic Process
IE6520 Theory and Algorithms for Online Learning

EE2211 Introduction to Machine Learning (Fall 2022)
EE2012A Analytical Methods in Electrical and Computer Engineering (Spring 2022)

National Scholarship Award, PRC (2016)
Nankai First Class Scholarship (2017, 2018)

CVPR 2023, ICCV 2023, CoLLAs 2023
TNNLS (2022), CoLLAs 2022

I'm always more than willing to give a talk about my work and my field of study! Feel free to reach out :)

Introduction to Federated Learning @ UMass Amherst, host: Hong Yu
Continual Learning via Bit-Level Information Preserving @ ContinualAI, host: Vincenzo Lomonaco